A CONVERSATION WITH

David A. Sprott

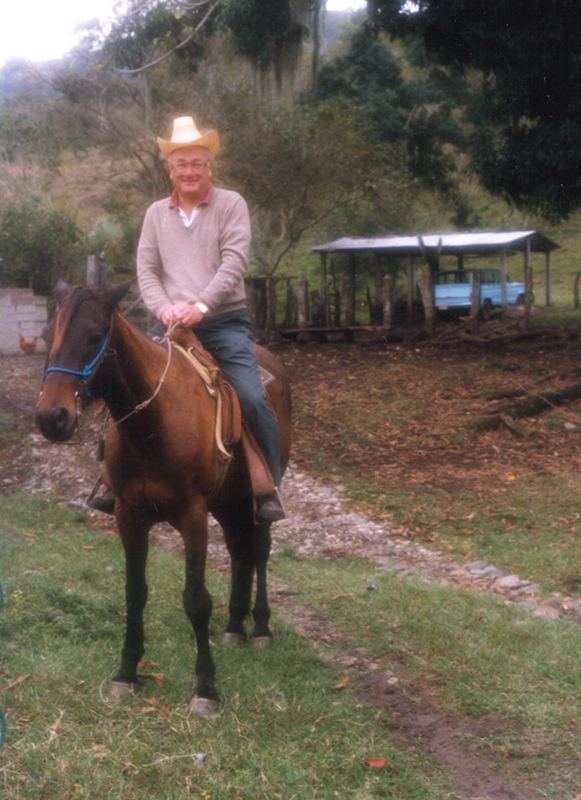

David Sprott in 1990

photo by Gwen Sharp

Veracruz, Mexico, February 1986

Munich, 1985

photos by R. Viveros Aguilera

David A. Sprott was born in Toronto in 1930. He obtained the B.A. in 1952, the M.A. in 1953 and the Ph.D. in 1955 from the University of Toronto. In 1955-56 he was a Research Assistant at the Galton Laboratory of the University of London, England. He then returned to the University of Toronto in 1956-58 as a Biogeneticist and Clinical Teacher in the Department of Psychiatry. He joined the University of Waterloo in 1958, was promoted to Professor in 1961, and became the first Dean of its Faculty of Mathematics in 1967. He also served as the first Chairman of the Department of Statistics from 1967 to 1975. Professor Sprott has held Visiting Professorships at the University of Saarland (1965), the University of Essex (1969-70), and the University of Munich (1975). He is a Fellow of the American Statistical Association, a Fellow of the Institute of Mathematical Statistics, a Member of the International Statistical Institute, and a Fellow of the Royal Photographic Society. In 1975 he was elected a Fellow of the Royal Society of Canada. In 1988 he was awarded the Gold Medal of the Statistical Society of Canada.

The following excerpts from a conversation recorded December 19, 1988 at the University of Waterloo originally appeared in Liaison Vol. 3, No. 2, February 1989.

L = Liaison, S = Sprott

L. How did you get into Statistics in the first place?

S. My original intention was to be an actuary. I had excelled in mathematics at high school, so there was no doubt I’d go into mathematics: Mathematics, Physics, and Chemistry. It was supposed to be the hardest course. And I think it would be, for people who couldn’t do mathematics, but if you could, it was simple to get a first. I had gone in on a scholarship, and maintained it, much to the astonishment of some of the professors there. But I became progressively disenchanted with the subjects. The first year did me in in chemistry: I thought that a fatuous bunch of philosophical nonsense. The same thing happened in physics in the second year. In third year it became the turn of pure mathematics. And so I think like a lot of people I got into statistics by default. There was nothing left.

I was intending to be an actuary primarily because I thought they made money, but the insurance company turned me off that. The first summer I spent at the National Life I was multiplying a by 1.025 and adding it to b for two months on one of those old desk calculators. And the second year — I guess I couldn’t believe it so I went back for a second dose — the second summer was even worse, since they now had an IBM computer, and I spent the whole summer reading printouts to see where the computer had made a mistake. But the real reason I didn’t become an actuary is that I could never pass Part 3 of the actuarial exams, statistics and probability. I kept failing it over and over again, and I decided I might as well quit that. There seemed to be nothing else to do but go on to graduate work. In statistics.

As a matter of fact, the trouble was I didn’t want to work. I thought the university and its atmosphere were sufficiently lethargic to appeal to me. Ralph Stanton was my supervisor, so I took a lot of courses from him. That’s when I first went through Feller. The first edition of his book had just come out, and we went through it in a graduate course. Then from studying Fisher’s Design of Experiments I became interested in combinatorics, and of course Stanton was quite good at that sort of thing, so we read the papers of Bose. The issue of confounding arose. That was obviously a combinatorial problem involving group theory; and Bose’s work involved finite projective geometries and then more generally configurations like balanced incomplete block designs, partially balanced ones, and difference sets. It was amazing, I think, the amount of mathematics that this entailed. I ended up reading some algebraic number theory, trying to see how some of this was done. And one of the most beautiful theorems I’ve seen was Shrikhande’s (1) demonstration that for a symmetrical balanced incomplete block design, which means number of varieties = number of blocks, r − λ has to be a perfect integral square when the number of varieties is even. Here r is the number of replications, the number of times a treatment is applied, and λ is the number of times each pair of treatments occurs together in a block, so that the design is balanced in giving equal variances for the differences between all pairs of treatments. I found that interesting, and also Bose’s theorems on difference sets, and generating designs from them by successively adding to initial blocks.
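The idea of developing an initial block can be sketched in a few lines of Python. This is an illustration only, not code from Bose’s, Shrikhande’s or Sprott’s papers, and the function names are hypothetical. It develops the (7, 3, 1) difference set {0, 1, 3} modulo 7 into a symmetric design, checks that every pair of treatments occurs together exactly λ times, and applies Shrikhande’s necessary condition (for even v, k − λ, which equals r − λ in a symmetric design, must be a perfect square). Passing the check does not by itself guarantee that a design exists.

```python
from itertools import combinations
from math import isqrt


def develop(initial_block, v):
    """Generate the v blocks of a cyclic design by adding 0, 1, ..., v - 1
    (mod v) to every element of the initial block."""
    return [sorted((x + shift) % v for x in initial_block) for shift in range(v)]


def pair_counts(blocks, v):
    """Count how many blocks contain each unordered pair of treatments."""
    counts = {pair: 0 for pair in combinations(range(v), 2)}
    for block in blocks:
        for pair in combinations(sorted(block), 2):
            counts[pair] += 1
    return counts


def passes_shrikhande(v, k, lam):
    """Necessary condition for a symmetric BIBD (b = v, so r = k): when v is
    even, k - lam (equivalently r - lam) must be a perfect square."""
    if v % 2 == 1:
        return True  # the condition places no restriction when v is odd
    d = k - lam
    return isqrt(d) ** 2 == d


blocks = develop([0, 1, 3], 7)  # {0, 1, 3} is a (7, 3, 1) difference set mod 7
print(blocks[:3])                            # [[0, 1, 3], [1, 2, 4], [2, 3, 5]]
print(set(pair_counts(blocks, 7).values()))  # {1}: every pair occurs once
print(passes_shrikhande(22, 7, 2))           # False: 7 - 2 = 5 is not a square
```

The (22, 7, 2) parameters satisfy the usual counting identity λ(v − 1) = k(k − 1), so it is the square condition alone that rules that design out.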

Working in this area was really quite fulfilling because you could actually see what you’d done. Nobody could say that you hadn’t done it, or that you should have done it another way. It was not like statistical inference: the refereeing was much more straightforward. I sometimes wonder if I shouldn’t have stuck to that! Anyway, I wrote a thesis on incomplete block designs, and derived with Stanton a way of getting a new series of difference sets which I think is still cited (2).

I also took an outside minor in genetics. I had to. That was one good thing the university did, because Stanton was prepared to give me an outside minor but the department ruled that out. They said an outside minor had to be outside. So I went to Len Butler in the biology department. Much to my astonishment he welcomed me with open arms. Biologists tended to be rather defensive where it came to statistics, but he never was. He said I should take the undergraduate fourth year course, and also a graduate seminar course. And I said I hadn’t had any biology, how could I take a graduate course in biology, and he said I’d probably be the only one who would understand it!

The undergraduate course was a bit difficult to get on to at first, but as soon as you got the laws of Mendel and segregating genes it became simple. And it soon became apparent why Butler had said I was the only one who would understand the graduate course. It was out of Mather’s book on the measurement of linkage, and it turned out to be straight maximum likelihood. It has become clear to me now that Fisher had really developed maximum likelihood for straight application to genetics. That was the problem that gave rise to it, I’m sure. And I think geneticists are possibly the only people now who really do understand maximum likelihood. If you want a decent write-up on maximum likelihood, you have to go to a genetics book to get it. You won’t get it in any of the statistics books from the point of view of somebody who wants to use it.

In that course we each had to give lectures, and I was down to read something by Lush or some such person on coefficients of something or other. And Butler saw that I had in front of me The Theory of Inbreeding, by Fisher (3). He asked if I understood it, and I said I understood it far better than the thing I was supposed to give a lecture on. He said, “All right, if you want to give a lecture on that, that’ll do.” So I ended up giving three or four lectures on the theory of inbreeding, in which the professor himself sat in the class taking notes. I understand that in the following year in the undergraduate course he introduced the notion of a transition matrix for a Markov chain, which was the basic model in the theory of inbreeding. In fact a lot of what you see in Markov chains and stochastic processes derived impetus from genetics. The spread of a gene through a population is an example of a branching process which appears in Feller, and you’ll see that example in Fisher’s book, The Genetical Theory of Natural Selection. Along with the standard treatment of inbreeding. It was clear that Fisher didn’t have any of the theory of Markov chains, because he developed this treatment afresh.
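Both ideas can be illustrated in a short Python sketch under simplifying assumptions of our own. The first part uses self-fertilization, the simplest inbreeding system (chosen for brevity; Fisher’s book treats systems such as sib mating, which need more states), to show a transition matrix driving genotype frequencies from one generation to the next. The second part treats the spread of a single new gene as a branching process, assuming each copy leaves a Poisson number of copies; the survival probability then follows from a fixed-point equation. All names are hypothetical.

```python
import math

# Self-fertilization, the simplest inbreeding system (chosen here for brevity).
# States are the genotypes AA, Aa, aa; row i holds the probabilities that an
# offspring of a parent in state i falls in each state.
SELFING = [
    [1.0, 0.0, 0.0],    # AA -> AA
    [0.25, 0.5, 0.25],  # Aa -> AA, Aa, aa in Mendelian 1:2:1 proportions
    [0.0, 0.0, 1.0],    # aa -> aa
]


def step(dist, matrix):
    """Advance one generation: row vector of state frequencies times the matrix."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def heterozygosity_by_generation(generations):
    """Frequency of Aa in each generation, starting from all heterozygotes."""
    dist = [0.0, 1.0, 0.0]
    history = []
    for _ in range(generations):
        dist = step(dist, SELFING)
        history.append(dist[1])
    return history


def survival_probability(mean_offspring, tol=1e-12):
    """Chance that a single new gene is never lost, if each copy leaves a
    Poisson(mean_offspring) number of copies. The extinction probability q is
    the smallest root of q = exp(mean_offspring * (q - 1)); iterating from
    q = 0 converges to it."""
    if mean_offspring <= 1.0:
        return 0.0  # extinction is certain without a selective advantage
    q = 0.0
    while True:
        new_q = math.exp(mean_offspring * (q - 1.0))
        if abs(new_q - q) < tol:
            return 1.0 - new_q
        q = new_q


print(heterozygosity_by_generation(4))       # [0.5, 0.25, 0.125, 0.0625]
print(round(survival_probability(1.01), 4))  # 0.0197
```

Under selfing the heterozygote frequency halves every generation. With a 1% advantage the survival probability comes out close to the familiar small-advantage approximation 2s = 0.02.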

L. What did you do after the Ph.D.?

S. Through Butler and Stanton I got an NRC postdoc to study at the Galton Lab under Penrose. He was an MD and FRS, working in human genetics. I spent a year there. At that time the big issue was the consequences of background radiation from nuclear testing. It involved some controversy about the forces in evolution. Fisher had shown that if a heterozygote Aa is superior to both homozygotes (AA and aa), then even the recessive “bad” gene can be kept in stable equilibrium without any mutation rate at all. A question they were working on was to find the condition under which three alleles could be kept in stable equilibrium. Sickle-cell anaemia in Africa was an example of genes that seemed to exhibit this particular feature. It turned out that the condition was rather simple, positive definiteness of the matrix of selection coefficients, and that it applied in general to the case of k alleles (4).
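Fisher’s two-allele result can be checked numerically. The sketch below assumes random mating and fitnesses 1 − s, 1 and 1 − t for AA, Aa and aa (the symbols s and t and the numerical values are our own illustrative choices). With both s and t positive, the frequency of A settles at t/(s + t) from either side, so the less fit allele a is retained without any mutation. It does not attempt the k-allele condition described above.

```python
def next_freq(p, s, t):
    """One generation of selection under random mating.
    Assumed fitnesses: AA = 1 - s, Aa = 1, aa = 1 - t, so the heterozygote is
    superior when s, t > 0. Returns the new frequency of allele A."""
    q = 1.0 - p
    w_bar = p * p * (1 - s) + 2 * p * q + q * q * (1 - t)  # mean fitness
    return p * (p * (1 - s) + q) / w_bar


def iterate(p, s, t, generations):
    """Apply next_freq repeatedly, starting from allele frequency p."""
    for _ in range(generations):
        p = next_freq(p, s, t)
    return p


s, t = 0.1, 0.9  # illustrative values only
print(t / (s + t))                         # predicted equilibrium: 0.9
print(round(iterate(0.01, s, t, 500), 6))  # 0.9
print(round(iterate(0.99, s, t, 500), 6))  # 0.9
```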

At that time the NRC postdoctoral scholarship amounted to something like $1800, plus travel. And it was quite sufficient. I actually lived off that money.

L. You then returned to Toronto.

S. Yes, it was through Penrose and Sheppard, the actuarial professor, who knew Stokes, the head of psychiatry, that I came back in the position of clinical teacher of psychiatry at the University of Toronto. I think this experience must have contributed a great deal to my outlook on science and statistics, because I could see what their researchers were doing, and I had a feeling for what they ought to be doing, and it seemed to me that the statisticians weren’t answering the right questions. In the favourable case these people had large amounts of equipment and had spent years collecting data, and they wanted to make a statement. They weren’t interested in hearing, “Well, if you did this in the long run this would happen.” They wanted to know what they could say now.

The year I was in England Fisher had published his paper, “Statistical Methods and Scientific Induction” (5), and it was trying to understand this paper which really aroused my interest in statistics. The course I’d had, the degree I’d got in statistics, had not actually done this. I was interested in experimental design from the combinatorial point of view, but the logic of inference had never penetrated. Though I do remember a case when in the fourth year somebody brought me a practical problem in regression in which the x’s were uncontrolled, and it had occurred to me, following rigorously the theory I had been taught, that you should allow for the variation in the x’s by taking the joint distribution of the x’s and y’s; and the statistics professor said “No, you wouldn’t do that, you’d condition on the x’s,” and I said, “But why? The course you’ve given us wouldn’t say you should do that at all.” And he said “No, but that’s what you’d do.” Well that’s what Fisher said too, but he was saying why you would do it. And while nothing I’d learned would contradict what he said, I was finding out here the logic behind it. The combination of that with what I saw going on in the psychiatric hospital, along with many interesting discussions with Dan DeLury at this time, deeply influenced my views on science, measurement and inference.

I remember one telling statement Fisher made about the Behrens-Fisher test when Bartlett said there is no logical reason to regard S1/S2 as fixed (6). Fisher said that, whatever Bartlett might mean by that, the observed value of S1/S2 was known to the experimenter as a fact and all other values are known not to be facts. That struck me as being true. There is a difference between observing something and not observing it, particularly if (like the psychiatric researchers) you’ve paid about $100,000 and spent five years doing it. To be told you should average over it in a sequence of similar trials seems far-fetched.

L. What sort of data did they actually have at the psychiatric hospital?

S. Terrible data. As far as I could see everything was totally subjective. As an example, they were interested in a disease called periodic catatonia. They had five physiological measurements being taken on patients for a period of about five years. One of these was called the basal metabolic rate, the BMR, which you were supposed to measure at about 6 a.m., before the patient got excited. I looked over these data, and thought of using discriminant functions and multivariate analysis. But I found that over the five years they had only about four observations in which the vector of the five measurements was fully present. They had no notion of rigorously taking observations. But what was worse, at the end of the five years they found out that the lab technician responsible for taking the BMR was a religious fanatic, and she’d used that period in the morning to try and convert these people. Nobody knew what this had done to the basal metabolic rate!

Another researcher who invited me into his lab was interested in cyclical changes in metabolic rate. The blood comes very close to the surface of the nail at the cuticle, and if you put oil there and look at it through a microscope you can see the blood coursing around. If you stop the flow with a blood pressure gauge, the blood changes from red to blue as the haemoglobin is used up, and if you put a spectrometer there, the spectral lines of the haemoglobin disappear. He was measuring the length of time from putting the gauge on to when the lines disappeared. That was a measure of change in metabolic rate. Now I would have thought that would take a really decent piece of equipment. What he had was a thing like a magnifying glass which you might have bought at Woolworth’s. I looked through it and all I could see were my eyelashes. I couldn’t see any haemoglobin line. I couldn’t even see the nailbed.

When I plotted the observations there was a perfect sine curve. I said there was no point in doing a statistical analysis on that: it looked like a tuning fork. But who could believe measurements obtained with primitive equipment like that? Also, someone raised with me the possibility that these cycles were produced by the experiment itself. The point was that every two minutes he was putting on the blood pressure gauge, releasing it and taking the measurement. If you stimulate an organism like that periodically there’s nothing to say that the organism won’t end up by responding equally rhythmically without anything untoward going on. Conversations with DeLury had impressed on me the necessity of reproducible and objective observations, which was exactly what these weren’t.

That took me more or less up to the time I came here. I spent two years at the psychiatric hospital. Putting it that way I have to emphasize that I was in the control group. People would ask me what was the difference between the University of Waterloo and the psychiatric hospital, and the answer to that was that at the psychiatric hospital occasionally people did get better!

L. So you moved from one institution to another.

S. Yes. Stanton had come the year before. That was the year the universities took off. Primarily I think because the Russians had put up Sputnik around the earth and people decided they’d better do something about it. The university was the repository of all knowledge and the solution of all problems. So the big increases came in salaries. Also just around that time Waterloo was opening up, and Stanton, after much agonizing, left Toronto and came and set up a department here. And I came in the second year, I guess it was 1958. I came here as an Associate Professor with about $8,800. And Stanton said, “That’s a very high salary; you needn’t expect to get anything for a while.” Which was true, I didn’t.

It was about four or five years before I taught any statistics courses. They didn’t have any statistics then. I taught algebra and calculus mainly. Computer science barely existed. As an undergraduate, when I couldn’t stand the insurance company any more, I went and worked at the computing centre in Toronto, multiplying a by 1.025 and adding b. They had IBM equipment, which occasionally I got to use, and you had to wire the thing up yourself. Then when I was a graduate student they got one of the first great big electronic computers. It was a whole monstrous roomful of tubes, and it looked like a hospital, with white-gowned people going around nursing it. Fifty percent of the time was spent on maintenance, and if you wanted to use it you had to stay up all night. You had to program it in machine language which was in the scale of 32 read backwards. When you think that that thing didn’t have nearly the power of the PC on the desk here it’s really rather remarkable. But computing science as you know it today didn’t exist. It seems to me that most of the people who were hired early on as professors in Computer Science never studied computing science at a university.

Just as statistics as it’s taught now bears little resemblance to what most of us were taught at university. There’s no question in my mind that people who graduate from second year here know more statistics than I did when I was a graduate student at Toronto. Because a lot of the things that are in these courses now were topics of research then. I remember doing work here with Jim Kalbfleisch when he was a graduate student, puzzling out certain aspects of conditional inference which are now in the second-year book.

The courses I did give were strictly, I suppose you would say, applied. That’s all I ever liked about mathematics. In high school the day they substituted letters for numbers was the day I became a mathematician, because I couldn’t add. Just to let x be the unknown extends the scope of what you can do so tremendously, and that was what impressed me. And then the extension to calculus where you can use derivatives and integrals to solve problems that you couldn’t otherwise solve. But all that pure mathematics, continuity and the existence of derivatives left and right, all this “ε, δ” business we were introduced to in second year, I found singularly uninteresting. I did find complex variable theory interesting, but that was because you could do something with it.

L. You’re of the British tradition, I guess.

S. I guess that must be it. I remember having an argument along those lines with somebody from another university. He was arguing that the Americans got to the moon first because they had this modern mathematics which they didn’t have in Britain. And I said I thought that this was the biggest hogwash I’d heard. As far as I could see, what had got them to the moon were the laws of Newton, and the technology of miniaturization, which had nothing to do with mathematics. As far as I know the mathematics was essentially that of Gauss. Because I remember writing an article on Gauss in 1977 (7), and it was Ian McLeod who pointed out to me an article by Young in Cambridge, talking about recursive least squares, and saying that Gauss would be interested to know that a development of his least squares was being used on the Apollo mission. And then he gave the relevant formulae. And I remember being rather astounded, going back to read Gauss, to find that it wasn’t a development from Gauss because the same formulae were actually in Gauss’ work.
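The recursive form of least squares can be sketched as follows. This is the standard textbook update written in Python under our own assumptions (a diffuse starting value P = I/δ, and a hypothetical function name `rls_fit`); it is neither Gauss’s notation nor the formulae given in Young’s article. Each new observation updates the estimate without refitting from scratch, and after the last observation the result agrees with the batch least-squares fit up to a term of order δ.

```python
def rls_fit(rows, ys, delta=1e-8):
    """Recursive least squares, one observation at a time.
    rows: regressor vectors x_k; ys: responses y_k.
    Starts from beta = 0 and P = I / delta (an assumed diffuse start)."""
    p = len(rows[0])
    beta = [0.0] * p
    P = [[(1.0 / delta) if i == j else 0.0 for j in range(p)] for i in range(p)]
    for x, y in zip(rows, ys):
        Px = [sum(P[i][j] * x[j] for j in range(p)) for i in range(p)]
        denom = 1.0 + sum(x[i] * Px[i] for i in range(p))
        gain = [v / denom for v in Px]                  # equals P_new @ x
        resid = y - sum(x[i] * beta[i] for i in range(p))  # prediction error
        beta = [beta[i] + gain[i] * resid for i in range(p)]
        # P_new = P - P x x^T P / (1 + x^T P x); P stays symmetric
        P = [[P[i][j] - gain[i] * Px[j] for j in range(p)] for i in range(p)]
    return beta


xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
noise = [0.1, -0.2, 0.05, 0.0, 0.3, -0.1, -0.25, 0.15, 0.0, -0.05]
ys = [2 + 3 * x + e for x, e in zip(xs, noise)]
beta = rls_fit([[1.0, float(x)] for x in xs], ys)
print([round(b, 4) for b in beta])  # intercept and slope, as in a batch fit
```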

L. How did you come to begin teaching statistics?

S. Well, eventually there had to be courses in statistics. The first second-year course I can remember, it couldn’t have been the first one I gave, was in two sections. Behara was here then, and he gave one section and I gave another. My section had Zarnke and a number of the computer whizzes who, I think, eventually produced WATFOR, whose fast turnaround times gained us an enviable reputation. All I remember is that they all went into computer science, whereas Behara’s division all went into statistics, and it had in it Jack Kalbfleisch, Jerry Lawless, and others. Now the explanation I received for that was that their lab was supervised by Jim Kalbfleisch. I think I supervised my own or something like that, and scared them all out.

There must have been things that happened before that, and I can’t remember. I know Jim came here to get his Ph.D., again doing a combinatorial subject: he did something on Ramsey numbers and edge colourings of graphs which was quite elegant. And he was doing statistics on the side.

I must have been giving graduate courses almost before the undergraduate courses at that point. There was a big kerfuffle at the time, with all kinds of memos being written as to whether or not we should institute graduate studies in Mathematics. There were a lot of people opposed to it, saying we were outclassed by Toronto and this and that, and that we should be focussing on our undergraduates. Stanton’s view was that we should get into graduate studies as soon as possible, and I think he was right. At any rate, all those memos were going around, the thing went through, and I was teaching a course in statistical inference out of Fisher’s book, Statistical Methods and Scientific Inference. Well, that was sort of a case of the blind leading the blind, I think, because the book was a relatively recent one and I had been ploughing through it trying to figure things out.

In third year there was a regression course, which I gave to the class which had Jack Kalbfleisch and Jerry Lawless and Neil Arnason and others of the same calibre. The trouble was that for that course I had to have linear algebra, because it was straight regression, and the multivariate normal distribution. I thought I had to have linear algebra anyway. I’ve come to realize now that you don’t need it, and I wouldn’t do it that way, but that was after seeing Barnard’s approach. I thought I had to do it then, and also I thought the linear algebra was reasonably elegant. But they hadn’t taken linear algebra. So I spent the first term teaching linear algebra and no statistics at all. And having done all that, the only interesting article I had ever seen in the Annals was on random orthogonal transformations and how you could get a reasonably elegant derivation of the Wishart distribution and the Bartlett decomposition using linear algebra. So I took that up in the course, with the firm statement I think beforehand, just to placate them, that I was not going to require it on the exam. But now the story’s gone around, as you’ve probably heard, that I taught the Wishart distribution in third year. That was the origin of that.
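The result that derivation leads to, the Bartlett decomposition, can be checked by simulation. The Python sketch below is our own illustration, not the derivation via random orthogonal transformations: it assumes Σ = I, builds a lower-triangular A whose squared diagonal entries are chi-square with n, n − 1, …, n − p + 1 degrees of freedom and whose sub-diagonal entries are independent N(0, 1), and forms W = AAᵀ, which is then Wishart with n degrees of freedom. For a general Σ = LLᵀ one would use LAAᵀLᵀ. The Monte Carlo average of W should be close to nI. Function names are hypothetical.

```python
import math
import random


def wishart_bartlett(p, n, rng):
    """Draw W ~ Wishart_p(n, I) as W = A A^T, with A lower triangular,
    A[i][i]**2 ~ chi-square(n - i) for i = 0..p-1 (0-based indexing) and
    independent N(0, 1) entries below the diagonal."""
    if n < p:
        raise ValueError("need n >= p")
    A = [[0.0] * p for _ in range(p)]
    for i in range(p):
        # chi-square with k degrees of freedom is Gamma(shape=k/2, scale=2)
        A[i][i] = math.sqrt(rng.gammavariate((n - i) / 2.0, 2.0))
        for j in range(i):
            A[i][j] = rng.gauss(0.0, 1.0)
    return [[sum(A[i][k] * A[j][k] for k in range(p)) for j in range(p)]
            for i in range(p)]


def monte_carlo_mean(p, n, draws, seed=0):
    """Average of `draws` simulated Wishart matrices."""
    rng = random.Random(seed)
    total = [[0.0] * p for _ in range(p)]
    for _ in range(draws):
        w = wishart_bartlett(p, n, rng)
        for i in range(p):
            for j in range(p):
                total[i][j] += w[i][j]
    return [[v / draws for v in row] for row in total]


mean = monte_carlo_mean(p=3, n=5, draws=10000)
for row in mean:
    print([round(v, 2) for v in row])  # close to 5 on the diagonal, 0 elsewhere
```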

L. It’s good to have the record set straight!

S. I taught that group again in fourth year, 1965 I think it was. They took two courses from me, one in statistical inference and one in experimental design. Clif Young had come also, from McMaster, to get a Master’s degree. I apparently impressed Clif Young, because the only way I knew how to give confounding was through the use of projective geometries, and this seemed a little far out to anyone with a practical application in mind: pencils and planes in an abstract n-dimensional projective geometry on a finite field GF(p). But actually it was the only way I could see how you could delineate what it was that was confounded and link it with the analysis. In fact it’s the way I still have to look at it.
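The geometric view of confounding can be sketched concretely. In the picture assumed here, the p^n treatment combinations of a p^n factorial (p prime) are the points of the n-dimensional space over GF(p), an effect corresponds to a nonzero coefficient vector a, and the blocks are the p parallel hyperplanes a · x = c (mod p); that effect is then confounded with blocks, while effects that are not multiples of a stay balanced within blocks. The Python below is a hypothetical illustration of this standard construction, not Sprott’s own presentation.

```python
from itertools import product


def confounded_blocks(p, n, a):
    """Split the p**n treatment combinations of a p^n factorial (p prime)
    into p blocks by the value of the linear form a . x (mod p), so the
    effect with coefficient vector a is confounded with blocks."""
    blocks = {r: [] for r in range(p)}
    for x in product(range(p), repeat=n):
        r = sum(ai * xi for ai, xi in zip(a, x)) % p
        blocks[r].append(x)
    return blocks


# 2^3 factorial in two blocks of four, confounding the ABC interaction
for r, block in confounded_blocks(2, 3, (1, 1, 1)).items():
    print(r, block)
# 0 [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
# 1 [(0, 0, 1), (0, 1, 0), (1, 0, 0), (1, 1, 1)]
```

In the usual letter notation block 0 is {(1), ab, ac, bc}. The same function with p = 3 and a = (1, 1) splits a 3^2 factorial into three blocks of three, confounding one component of the AB interaction while each level of A and of B appears once in every block.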

When the department was formed it essentially consisted of Greg Bennett, myself and Jim. And Mike Bennett, who was in actuarial science. I advised Clif not to stay around here to get a Ph.D., and I think I advised Jack not to stay around either. I didn’t feel confident, I still don’t actually, in supervising Ph.D.’s. I think it’s a tremendous responsibility that I never really wished to undertake. Because I haven’t got a problem I could present to a student and say, “Look, if you do this and work on it and get some answers there’s a Ph.D. in it.” The problems are more open-ended than that, and the point is, if I had a problem I’d have solved it myself if I could. If I couldn’t, then I didn’t think they would be in a better position. As Barnard has said, to write a thesis in statistical inference requires a degree of maturity that most students don’t have. So I’m very wary of taking on students unless they know what they want to do and are prepared to spend a lot of time working on it.

At any rate, Clif did go, he went to Finney to get his degree, but Jack asked if I would supervise him, and I said “Yes, but you have to realize I don’t have a problem, I’m just puzzling things out”, and he said that was fine. That actually turned out to be quite good, because I was Dean then, and I’m quite sure I wouldn’t have written nearly as much without him.

L. That was around 1968?

S. Yes, and that was around the time Godambe came. He’d visited here before that, actually, in 1963 or 1964 when he was at Statistics Canada. And so it had come to my attention that there was some issue in sampling finite populations about balls and labels, and I couldn’t get to the bottom of it. I told my class (in statistical inference) that there’s something funny, it’s not what you think it is when you sample balls in an urn, you think you have a simple binomial distribution but somehow it evaporates and you don’t. You think you have a likelihood function, but somehow it evaporates and you don’t. And I said, “I don’t understand it; the expert who knows all about this is Professor Godambe, and I’ve invited him here to explain it to you.” So Godambe came to explain it, and the net result was success in one way and failure in another: Godambe’s still here. And I still don’t understand it!

L. You actually met Fisher, didn’t you?

S. Very briefly. Actually he was quite gracious to me. I met him first when I was a postdoc. That’s when his paper came out, Statistical Methods and Scientific Induction (5), and I wrote to him and asked for a reprint. He sent me a reprint along with a letter saying, “I assume you come from overseas since the lab you’re at is wholly sold on the Neyman-Pearson approach.” I showed this to Penrose who was rather annoyed and said he didn’t think they were sold on the Neyman-Pearson approach. At any rate Fisher invited me — if I were from overseas — to come and see him, which I eventually did. And I sat in on a lecture Fisher gave. He gave regular lectures in the School of Agriculture. I found this rather amusing. There must have been some statisticians at Cambridge, but he was giving lectures to the agriculturalists who probably didn’t understand a word he was saying. His lecturing style was horrible. I heard a lot of stories about that.

After the lecture he stuck me in the mouse labs with proofs from his book, Statistical Methods and Scientific Inference, which I hadn’t seen. He left me there all afternoon reading that, and I had a sort of vague talk with him.

The next thing that attracted his attention came up after his book came out. I was interested in the example in the book in which you had observations of two different kinds. You had a radioactive source which generated a continuous distribution, and you took a single observation from that, and you could generate a fiducial distribution of θ and apply that to the likelihood function of the remaining sample, which produced merely counts. Lindley in reviewing the book had given an example with two observations x and y where if you used x to get the fiducial distribution and the likelihood function based on y you’d get a different answer than if you did things the other way round. There was also a sufficient statistic, and you could get a fiducial distribution based on it which was different from either of the others, and I thought that would be the correct one. I was convinced that finding the first two distributions involved losing information somehow and that the intervals you’d get would be somehow longer. I worked a long time on that, and it suddenly occurred to me that the real justification lay in the fact of what you might call exchangeability or interchangeability of two observations of the same kind. You had an axiom there which it seemed to me would override any of the Kolmogorov axioms, and that was that observations which left the likelihood invariant should leave the answer invariant. That condition would rule out the first two distributions in Lindley’s example, because in those x and y appear asymmetrically. And I was able to show that if you impose the condition that applying Bayes’ theorem must give the same result both ways, you’d get the result obtained by using the sufficient statistic. I also mentioned that this did not imply a criticism of Fisher, because obviously the observations in his example were not interchangeable. I submitted this to Series B and expected it would be resoundingly rejected. But it wasn’t. And it apparently attracted Fisher. I followed it up with another one, trying to generalize this notion of invariance in the likelihoods (8, 9).

Barnard wrote me a letter saying he had had the pleasure of refereeing my paper and that he was very much taken with my contribution to this field, and could I visit him in Imperial College. I accepted this invitation very gladly: I had already been influenced by his work since DeLury had told me that if I wanted to understand Fisher, I should read Barnard. And so I went to see him. We had discussions there, with Jenkins, Winsten and others. And then we took a trip to Cambridge.

Fisher had written the papers on sampling the reference set by then (10, 11), which I didn’t understand. It’s only recently actually that I’ve come to understand them. He had a complicated way of getting the distribution of the variances when the means are equal, and I asked why you couldn’t condition the fiducial distribution of σ1, σ2 on θ1 = θ2 in the ordinary way. You get the same result he did; in fact I found out that way that he’d made an algebraic error, which he admitted. I remember him stroking his beard and saying, “Yes, that’s a very rational approach, but it doesn’t take into account how thin you slice the bacon.” When I was going back on the train with Barnard he said, “Did you understand Fisher’s comment about slicing the bacon?” I said, “Well, no, I don’t,” and Barnard said, “I think I do.” Actually it’s quite obvious when you come to think of it. The difficulty is not because of fiducial probability: you can get what appear to be paradoxes in ordinary probability if you condition on sets of measure zero. Because it really is a limiting procedure and the way you take the limit will affect the result. And that’s what Fisher meant about slicing the bacon.
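The point about slicing the bacon can be seen in ordinary probability with a standard textbook example (the Borel–Kolmogorov paradox), not Fisher’s own variance problem. Assume X and Y are independent Exponential(1). The events X − Y = 0 and X/Y = 1 are the same set, yet slicing thinly around the difference gives a limiting density for X proportional to e^(−2x) (mean 1/2), while slicing around the ratio gives one proportional to x·e^(−2x) (mean 1). The Monte Carlo sketch below, with a hypothetical function name, shows the two answers.

```python
import random


def conditional_mean_of_x(condition, eps, n_draws, seed=0):
    """Monte Carlo: X, Y independent Exponential(1). Average X over the draws
    falling in a thin slice of width about eps around the chosen event."""
    rng = random.Random(seed)
    total, count = 0.0, 0
    for _ in range(n_draws):
        x, y = rng.expovariate(1.0), rng.expovariate(1.0)
        if condition == "difference":
            keep = abs(x - y) < eps                    # slice around X - Y = 0
        elif condition == "ratio":
            keep = (1 - eps) * y < x < (1 + eps) * y   # slice around X / Y = 1
        else:
            raise ValueError(condition)
        if keep:
            total += x
            count += 1
    return total / count


print(conditional_mean_of_x("difference", 0.01, 200000, seed=1))  # near 0.5
print(conditional_mean_of_x("ratio", 0.01, 200000, seed=2))       # near 1.0
```

The ratio condition is written as a product inequality rather than a division so that a zero draw for Y cannot raise an error.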

That was the only other time I met him, because he died shortly after that. I’d never before perceived intellectual force to be almost like a physical force. He seemed somewhat frail at the end, you know, he walked with a cane, and he looked as though a little breeze would blow him over, but as soon as he opened his mouth he seemed to me to radiate force.

Although I met him only briefly, I had a more extended correspondence with him. Barnard refers to these letters in his article on Fisher as a Bayesian in the ISI Review (12). They were evidence that Fisher was revising his notions of fiducial probability, and I was someone he was trying them out on, because in my letters I was pointing out the difficulties I had: if you did this and that as he suggested, you ended up with a contradiction. But I didn’t use it to suggest he didn’t know what he was talking about. I used it to suggest that I’d obviously missed something, and he was writing back to tell me what he thought I’d missed. And he was always perfectly friendly in the letters.

L. In the last few years you’ve been spending part of the winter in Mexico. Could you tell us how that came about?

S. Well, back around 1965 my sister and her husband retired to Mexico. That’s how it really started, with a visit to them. They had looked around for a place to stay and happened to hit upon the region of Guanajuato. It makes a sort of isosceles triangle with Mexico City and Guadalajara. It’s up to the north in the beginning of the mountains, and it’s a silver mining area. The architecture’s quite exotic. An Italian architect designed all these fabulous haciendas around the ruins of the silver mines. If you get there in December or January as we will, you’ll see red bougainvilleas as big as trees in bloom, and hummingbirds. It’s like being in another world.

Guanajuato is a very old town like the European towns with crooked roads. To get through it one way the main street goes underground, subterranean all the way, which is quite a feat of engineering I would think. And the return route is above ground. You can never get a place to park. The whole town has been declared a national monument, so that you can’t change the architecture or anything. We stay in a little village, Marfil, about 6 km. away.

It had always been frustrating to me that we were always tourists, and I don’t like being a tourist. I certainly don’t like visiting a place when I can’t speak any of the language, so I took a six-week course in Spanish here in the summertime, which did a world of good actually. Then more recently we were blessed with a student from Mexico, Roman Viveros. I started talking to him about Mexico and where he was, and I got to know him reasonably well, and eventually ended up as his supervisor. He suggested I should try and speak Spanish to him for practice, so except when we were talking about his Ph.D. I tried to do this. Well, that helped a lot to actually develop my language, though it would never stay; I have to bone up on it every time I go.

I found out that, at Guanajuato, there is a research centre, CIMAT, which also offers courses through the University of Guanajuato. They seem to be quite an entrepreneurial group: their computing lab looks just like ours here. So I wrote there, and eventually got an answer back from the director of research. They’ve been very hospitable. CIMAT is part way up a mountain, right by a cathedral, originally owned by a nobleman. It’s on a square where buses stop regularly, and when you’re sick of working you go out and sit in the sun and watch all the tourists come and go. The lecture rooms are in the cloisters. It’s something like University College at Toronto actually, except that it’s in a somewhat different location! They’ve given me an office when I go, and I usually give a lecture there. In the last three or four years I’ve been lecturing in Spanish, or at least trying to.

So I give lectures at various places. Also I lie in the sun, thinking — or just lie in the sun. And I’ve used that period to write. It’s an excellent place to do this if you’ve thought more or less of what you want to write, because you’re alone, you’re not being bothered. This year I’ll be working on the lecture I’m supposed to give in Ottawa next May. And now I have a lap-top computer, which is fully as powerful as the PC with a 20 megabyte disk. I can take that with me and use the word processor — assuming I can find a place to plug it in. It’s a very pleasant place. It’s nice to get away from the snow and I always think Waterloo looks much better from a distance!

References

1. Shrikhande, S.S. (1950). Ann. Math. Statist., 21, 106-111.
2. Stanton, R.G. and Sprott, D.A. (1958). Can. J. Math., 10, 73-77.
3. Fisher, R.A. (1949). The Theory of Inbreeding. Edinburgh: Oliver and Boyd.
4. Penrose, L.S., Smith, S.M. and Sprott, D.A. (1956). Ann. Human Genetics, 21, 90-93.
5. Fisher, R.A. (1955). J. Roy. Statist. Soc. B, 17, 69-78.
6. Fisher, R.A. (1957). J. Roy. Statist. Soc. B, 19, 179.
7. Sprott, D.A. (1978). Historia Mathematica, 5, 183-203.
8. Sprott, D.A. (1960). J. Roy. Statist. Soc. B, 22, 312-318.
9. Sprott, D.A. (1961). J. Roy. Statist. Soc. B, 23, 460-468.
10. Fisher, R.A. (1961). Sankhya, 23, 3-8.
11. Fisher, R.A. (1961). Sankhya, 23, 103-114.
12. Barnard, G.A. (1987). Int. Stat. Review, 55, 183-190.